Benchmarking Large Codebase Search with Cursor, Windsurf, Claude Code, Copilot, Codex, Augment, and Jolt

We benchmarked the code search speed and quality of today’s top AI coding tools on open-source codebases, ranging from 780,000 to 4.7 million lines of code.

This post was updated on July 18, 2025 to add results from Augment Code.

Code search sits at the core of every AI coding tool. We cannot stuff entire codebases into an LLM’s context window. Today’s largest 1M-token context windows only fit ~50K lines of code [1], and codebases typically exceed that in less than 6 months. Even if LLMs had infinite context, their speed and recall accuracy degrade significantly as the input length increases [2]. A thorough and precise search is mandatory for fast, accurate LLM responses.

To measure how coding tools stack up, we benchmarked Cursor, Windsurf, GitHub Copilot, GitHub Copilot Agent, Augment, Claude Code, OpenAI Codex, and Jolt. Using six closed pull requests from the Django, Grafana, and Kubernetes codebases, we measured search speed, thoroughness, and accuracy. These are large, complex, and well-known codebases, and their PRs represent real-world programming tasks. Each PR was run four times across all eight tools, resulting in a total of 192 runs.

This benchmark isolates the search layer and ignores code-generation quality. Each tool ultimately calls the same foundation models from Anthropic, Google, or OpenAI, so code generation is largely similar. Real differentiation comes from the user experience and the search. We focused on search here and scored it on three criteria:

- Speed: turnaround time to return code context. Faster search means faster responses and faster iteration for both humans and agents.

- Thoroughness: does the tool surface every important file?

- Accuracy: does it avoid pulling in irrelevant files?

Together, these three metrics capture what makes a code search effective, and an effective search is what produces the highest-quality answers.

A brief history of code search

The most popular code search techniques are text search, vector search, and agentic search. Recently, many AI coding tools have either demoted or abandoned vector search in favor of agentic search. Here’s a snapshot of each method:

| Search Method | Description | Pros | Cons |

|---|---|---|---|

| User-specified context | An early and lazy approach, where users manually specify relevant files and folders. | - Gives user full control over context | - Places an undue burden on users - Tedious for large codebases, and impossible for unfamiliar codebases |

| Text | A strict or fuzzy text-matching search. It’s fast, but is rarely useful in real-world scenarios. Occasionally, it’s paired with AST parsing or dependency graphs to pull in interdependent files. | - Sub-second latency, negligible cost | - No semantic understanding; you must already know symbol names - Misses indirect references and renamed identifiers |

| Vector | The default for many RAG workflows. Codebase files (or their chunks) are embedded and stored in a vector database, and then a query finds the vectors most similar to the user’s prompt. | - Very fast look-ups, < 3 s after indexing - Cheap at query time | - Accuracy degrades quickly on codebases over 50K lines - Cannot “reason” about planned changes - Requires separate ingestion and a vector store - Requires indexing, which can take minutes to hours depending on codebase size and ingest engine |

| Agentic | An LLM-driven loop that utilizes ls, find, grep, rg (ripgrep), or similar commands, refining its target set and keywords across iterations. It’s more accurate than vector search, but often turns into a game of guess-and-check as the agent exhausts search term ideas. | - Accurate on 10M+ line codebases - No indexing required | - Search time balloons if initial guesses miss, 2-5 min was common during testing - Expensive due to multiple model calls and high token usage, > $0.8 per search was common during testing - Requires a terminal to operate, either locally or in a cloud sandbox |

| Hybrid semantic (Jolt HyperContext) | One-shot pipeline that combines structural analysis (AST + import graphs), text search, LLM semantic understanding, and LLM scoring. | - Accurate on 10M+ line codebases - Fast, 7-15 seconds - No indexing required - Cheaper than agentic search - Scales horizontally, e.g., a 2M line search takes about as long as 10M lines | - More expensive than pure text or vector look-ups - A few seconds slower than text and vector search |
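To make the agentic row concrete, here is a minimal sketch of the guess-and-check loop it describes. It is not how any of the benchmarked tools is implemented: the in-memory `files` dictionary stands in for a real repository, `grep` is a toy substitute for running grep or rg, and `follow_imports` is a hypothetical stub standing in for the LLM call that proposes the next search terms.

```python
import re
from typing import Callable, Dict, List, Set


def grep(files: Dict[str, str], term: str) -> Set[str]:
    """Return the paths whose contents contain `term` (toy stand-in for grep/rg)."""
    pattern = re.compile(re.escape(term), re.IGNORECASE)
    return {path for path, text in files.items() if pattern.search(text)}


def agentic_search(
    files: Dict[str, str],
    first_terms: List[str],
    propose_terms: Callable[[Dict[str, str], Set[str]], List[str]],
    max_rounds: int = 5,
) -> Set[str]:
    """Search, inspect what was found, propose new terms, and repeat."""
    found: Set[str] = set()
    tried: Set[str] = set()
    terms = list(first_terms)
    for _ in range(max_rounds):
        new_terms = [t for t in terms if t not in tried]
        if not new_terms:
            break  # the "agent" has run out of search term ideas
        for term in new_terms:
            tried.add(term)
            found |= grep(files, term)
        # In a real tool this step is a model call, which is where the
        # latency and token cost of agentic search come from.
        terms = propose_terms(files, found)
    return found


def follow_imports(files: Dict[str, str], found: Set[str]) -> List[str]:
    """Hypothetical stub for the LLM: chase names imported by the files found so far."""
    terms: List[str] = []
    for path in sorted(found):
        terms += re.findall(r"^from \w+ import (\w+)", files[path], re.MULTILINE)
    return terms


# Toy repository, invented for illustration only.
files = {
    "views.py": "from auth import check_password\n\ndef login_view(request):\n    return check_password(request)\n",
    "auth.py": "from tokens import make_token\n\ndef check_password(request):\n    return make_token(request)\n",
    "tokens.py": "def make_token(request):\n    return 'token'\n",
    "billing.py": "def charge(card):\n    return True\n",
}

hits = agentic_search(files, ["login_view"], follow_imports)
print(sorted(hits))
```

Each extra round widens the file set, but in a real agent it also costs another model call, which is the trade-off listed in the Cons column.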

Benchmark methodology and considerations

Codebases and prompts

We ran the benchmark against three open-source repos: Django (780K lines), Grafana (2.8M lines), and Kubernetes (4.7M lines). For each repo, we chose two recently closed pull requests. The PRs had a clear, self-contained feature or bug-fix goal, avoided routine maintenance chores, and crucially, did not explicitly name the files involved. Success depends squarely on each tool’s ability to discover the relevant code through search.

Every pull request was tested with two prompt variants, identical across all tools:

- List Files variant: ask to list files that should be modified in the PR

- Codegen variant: ask to generate code changes for the PR

Each variant was run twice per tool (192 runs in total) to smooth out the inherent randomness of LLMs, giving us a more balanced view of search speed, thoroughness, and accuracy.
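The run count follows directly from the design: six PRs, two prompt variants, two runs per variant, and eight tools. A short sketch of that arithmetic, with variable names chosen for illustration:

```python
# PRs chosen per repository (two each, per the methodology above).
prs_per_repo = {"Django": 2, "Grafana": 2, "Kubernetes": 2}
variants = ["list_files", "codegen"]
runs_per_variant = 2
tools = [
    "Cursor", "Windsurf", "GitHub Copilot", "GitHub Copilot Agent",
    "Augment", "Claude Code", "OpenAI Codex", "Jolt",
]

pull_requests = sum(prs_per_repo.values())
runs_per_pr = len(variants) * runs_per_variant   # each PR is run four times per tool
runs_per_tool = pull_requests * runs_per_pr      # sample size behind each tool's averages
total_runs = runs_per_tool * len(tools)

print(runs_per_pr, runs_per_tool, total_runs)
```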

Measuring speed

We captured two speed metrics for every run:

- Search Time: the interval between submitting the prompt and the end of the search phase. For agentic tools (Cursor, Windsurf, GitHub Copilot, Copilot Agent, Augment, Claude Code, Codex), this point occurs when the initial pass of search commands (ls, grep, ripgrep, and similar) finishes and the tool begins composing a response. For Jolt, internal telemetry marks when the search completes and generation starts.

- Total Response Time: the interval from prompt submission to the final token of the assistant’s reply.

Note: OpenAI Codex starts a sandbox container and clones the repo before searching; that startup time is included in both time metrics.
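The two metrics can be expressed as three timestamps per run. The sketch below is illustrative only and is not the harness used for this benchmark (many runs were timed manually); the `RunTimer` class and its method names are hypothetical. It records only the first end-of-search mark, mirroring the rule that follow-up searches do not count toward Search Time.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class RunTimer:
    """Hypothetical helper that records the three timestamps of one run."""
    clock: Callable[[], float] = time.perf_counter
    started: Optional[float] = None
    search_done: Optional[float] = None
    finished: Optional[float] = None

    def submit_prompt(self) -> None:
        self.started = self.clock()

    def mark_search_done(self) -> None:
        # Only the end of the initial search pass is recorded.
        if self.search_done is None:
            self.search_done = self.clock()

    def mark_final_token(self) -> None:
        self.finished = self.clock()

    @property
    def search_time(self) -> float:
        return self.search_done - self.started

    @property
    def total_response_time(self) -> float:
        return self.finished - self.started


# Scripted clock so the example is deterministic; real use would keep the default.
ticks = iter([0.0, 12.5, 40.0])
timer = RunTimer(clock=lambda: next(ticks))
timer.submit_prompt()
timer.mark_search_done()   # initial search pass ends
timer.mark_search_done()   # a later follow-up search is ignored
timer.mark_final_token()
print(timer.search_time, timer.total_response_time)
```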

Search quality scoring

We first graded each tool’s output with the point-based rubric below. For the List Files prompt variant, we scored the list of files the tool planned to change. For the Codegen prompt variant, we scored the actual files the tool modified in its response. In both cases, we checked against the files that were ultimately merged in the PR, which serve as the ground-truth reference.

Primary files are files changed in the PR with the most important code or logic changes. Secondary files also require changes, but are ancillary to the core changes. Documentation, test, and example files are neutral and do not affect the score.

| Type of File | Points |

|---|---|

| Primary file correctly listed | +1.0 |

| Secondary file correctly listed | +0.5 |

| Missing primary file | -0.25 |

| Missing secondary file | 0 |

| Unnecessary file | -0.25 |

| Folder path instead of file | -0.5 |

| Hallucinated / non-existent file | -0.5 |

After tallying the raw points for a run, we divide that total by the maximum points possible for that PR and map that percentage to the 0-to-3 scale shown below, making results comparable across PRs of different sizes.

| % of Total Possible Points | Search Score | Interpretation |

|---|---|---|

| 100% | 3 | All required files found; minimal extraneous files |

| 60-99% | 2 | Most required files found; minor misses or noise |

| 40-59% | 1 | Missing critical files |

| < 40% | 0 | Few or no relevant files |

A score of 2 or 3 is a pass. Scores below 2 indicate that the tool’s search failed.
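The rubric and the percentage mapping can be sketched as a few small functions. Two details are assumptions, because the tables do not spell them out: the maximum for a PR is taken to be every primary and secondary file found with no penalties, and percentages that fall between bands (for example 59.5%) are assigned by lower-bound thresholds. The example PR and its file counts are invented for illustration.

```python
from typing import Dict

# Point values copied from the rubric table.
POINTS = {
    "primary_found": 1.0,
    "secondary_found": 0.5,
    "primary_missing": -0.25,
    "secondary_missing": 0.0,
    "unnecessary": -0.25,
    "folder_path": -0.5,
    "hallucinated": -0.5,
}


def raw_points(counts: Dict[str, int]) -> float:
    """Tally one run: counts maps a rubric category to how often it occurred."""
    return sum(POINTS[kind] * n for kind, n in counts.items())


def max_points(n_primary: int, n_secondary: int) -> float:
    """Assumed maximum: every primary and secondary file found, no penalties."""
    return n_primary * POINTS["primary_found"] + n_secondary * POINTS["secondary_found"]


def search_score(raw: float, maximum: float) -> int:
    """Map the share of possible points to the 0-to-3 scale (assumed thresholds)."""
    share = raw / maximum
    if share >= 1.0:
        return 3
    if share >= 0.6:
        return 2
    if share >= 0.4:
        return 1
    return 0


def passed(score: int) -> bool:
    return score >= 2


# Invented example: a PR with 3 primary files and 1 secondary file.
maximum = max_points(n_primary=3, n_secondary=1)
run = {"primary_found": 2, "secondary_found": 1, "primary_missing": 1, "unnecessary": 1}
points = raw_points(run)
score = search_score(points, maximum)
print(points, maximum, score, passed(score))
```

In this invented run, one missed primary file plus one unnecessary file is enough to drop the result below the passing threshold.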

Tool settings

The following settings were used for each tool:

| Tool | Tier/Plan | Model | Specific Settings |

|---|---|---|---|

| Cursor | Pro | Sonnet 4 (Max mode) | Internet use disabled; waited for codebase indexing to be done |

| Windsurf | Pro | Sonnet 4 (BYOK "bring your own key") | Internet use disabled; waited for codebase indexing to be done |

| GitHub Copilot | Pro | Sonnet 4 | Ask mode was used for the List Files prompt variant; Edit mode was used for the Codegen prompt variant; each prompt was run with #codebase |

| GitHub Copilot Agent | Pro | Sonnet 4 | Copilot's "agent" mode |

| Augment | Developer | Default (unlisted, likely Sonnet 4) | Waited for codebase indexing to be done (took ~9 min for the Kubernetes repo); Agent mode; Auto tool usage was enabled |

| Claude Code | BYOK | Sonnet 4 (Default model) | Default settings |

| Codex | Pro | o3 codex-1 (Default model) | Ask mode ("fast mode") was used for the List Files prompt variant; Code mode was used for the Codegen prompt variant |

| Jolt | Team | Default | Default settings |

Note: The memory of each tool was cleared between runs to prevent memories from steering or short-circuiting the search process.

Additional considerations and notes

We designed this benchmark to be as objective, transparent, and repeatable as possible. The notes below outline the guardrails we used to keep results fair.

Each run was timed manually when internal telemetry was unavailable. When an agent paused for user confirmation, we replied in under a second and excluded that pause from the timing. To isolate the code search, we stopped the clock once a tool started running unit tests, linting, or self-verification. In a few cases, we had to adjust the prompt to prevent the agent from running tests or scripts.

We marked the search phase as complete when the initial pass of ls, grep, ripgrep, and other search-related commands ended. If the agent later realized it was missing information and launched additional searches, that extra time did not count toward Search Time. Therefore, some reported Search Time values for agentic tools are conservative: they understate the time those tools actually spent searching. These controls aim to give a real-world view of how quickly each tool can surface the code a developer needs.

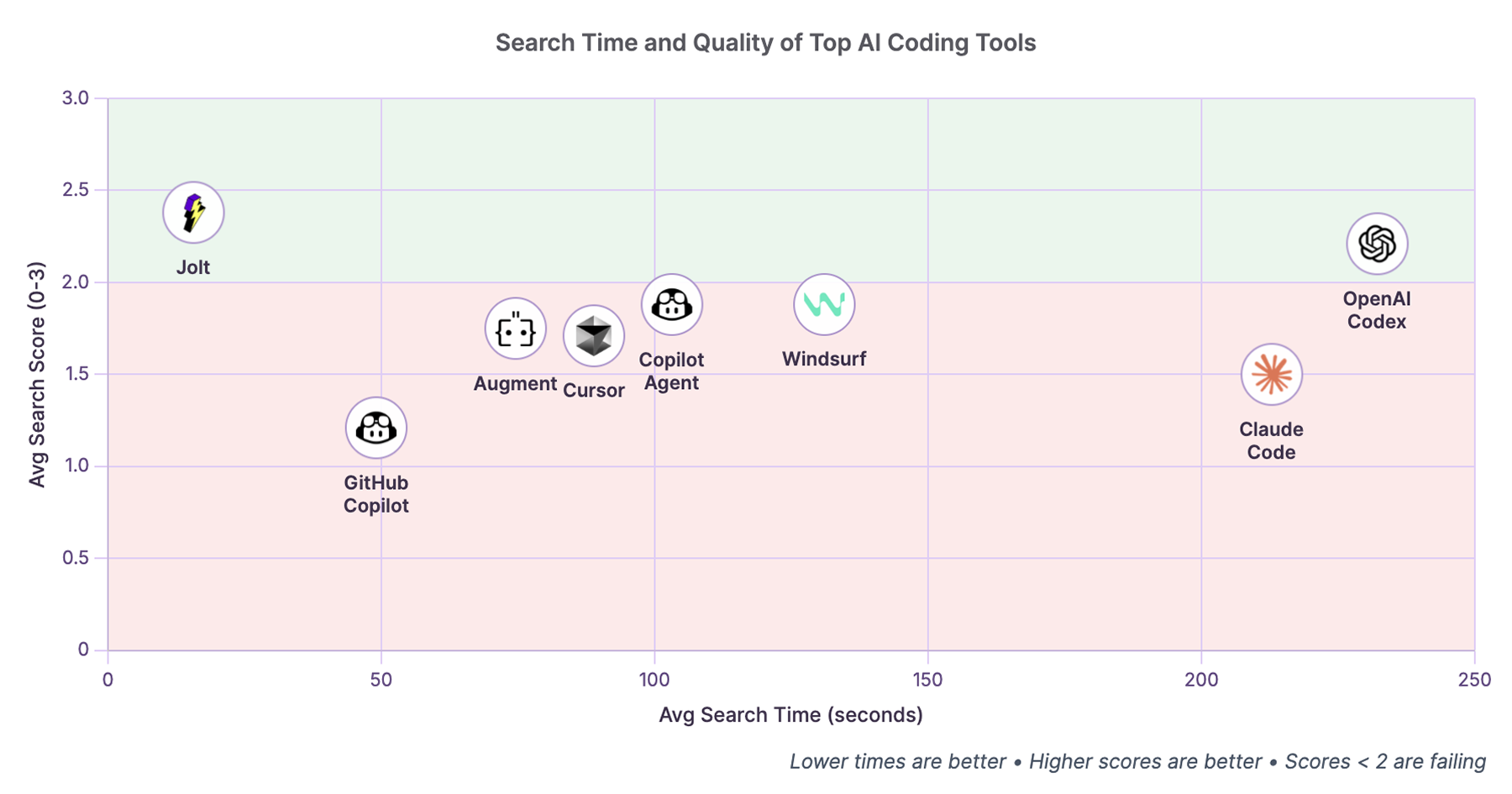

Result summary

| Tool | Rank | Avg Search Score (0-3) | Avg Search Time (sec) | Avg Total Response Time (sec) | Search Technology |

|---|---|---|---|---|---|

| Jolt | 1 | 🥇2.38 (pass) | 🥇15.67 (1x) | 🥇58.04 (1x) | hybrid semantic (LLM + AST + text) |

| OpenAI Codex | 2 | 🥈2.21 (pass) | 232.17 (14.8x slower) | 291.92 (5.0x slower) | agentic |

| GitHub Copilot Agent | 3 | 🥉1.88 (fail) | 103.17 (6.6x slower) | 173.83 (3.0x slower) | agentic + vector |

| Windsurf | 4 | 🥉1.88 (fail) | 131.04 (8.4x slower) | 197.29 (3.4x slower) | agentic + vector |

| Augment | 5 | 1.75 (fail) | 🥉74.58 (4.8x slower) | 223.25 (3.8x slower) | agentic + vector |

| Cursor | 6 | 1.71 (fail) | 88.88 (5.7x slower) | 🥉161.88 (2.8x slower) | agentic + vector |

| Claude Code | 7 | 1.50 (fail) | 212.88 (13.6x slower) | 317.75 (5.5x slower) | agentic |

| GitHub Copilot | 8 | 1.21 (fail) | 🥈49.08 (3.1x slower) | 🥈101.75 (1.8x slower) | vector, maybe partially agentic |

Detailed results table at the end of the post
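The "x slower" factors in the summary are each tool's average time divided by Jolt's average, rounded to one decimal. A small sketch that recomputes them from the table values; the `results` dictionary and helper names are for illustration:

```python
# tool: (avg search score, avg search time in sec, avg total response time in sec)
results = {
    "Jolt": (2.38, 15.67, 58.04),
    "OpenAI Codex": (2.21, 232.17, 291.92),
    "GitHub Copilot Agent": (1.88, 103.17, 173.83),
    "Windsurf": (1.88, 131.04, 197.29),
    "Augment": (1.75, 74.58, 223.25),
    "Cursor": (1.71, 88.88, 161.88),
    "Claude Code": (1.50, 212.88, 317.75),
    "GitHub Copilot": (1.21, 49.08, 101.75),
}

PASS_THRESHOLD = 2.0
_, base_search, base_total = results["Jolt"]


def slowdown(tool: str) -> tuple:
    """Return (search, total) slowdown factors relative to Jolt, to one decimal."""
    _, search, total = results[tool]
    return round(search / base_search, 1), round(total / base_total, 1)


passing = [tool for tool, (score, _, _) in results.items() if score >= PASS_THRESHOLD]

for tool in results:
    search_x, total_x = slowdown(tool)
    print(f"{tool}: search {search_x}x, total {total_x}x")
print("passing:", passing)
```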

Jolt's hybrid search was the clear speed leader and surfaced the essential files in most runs. It is the only tool with a passing score and sub-minute average Total Response Time.

OpenAI's Codex nearly matched Jolt on recall, but relied on a fully agentic loop, resulting in average Search Time and Total Response Time that were 14.8x and 5x slower, respectively. Claude Code's speed was similar to Codex, but the search quality was significantly worse, as the tool either missed important files or suggested irrelevant ones.

Several of the agentic tools read files in 100-200 line chunks rather than reading the entire file at once. This contributed to more LLM calls and higher latency, particularly for Claude Code. Pure vector search (GitHub Copilot non-agent modes) returned quickly but often missed important files and returned irrelevant results, illustrating the precision limits of vector search.

The hybrid agentic and vector search tools (Copilot Agent, Windsurf, Cursor, and Augment) had similar results, likely because they defer the workflow to the LLM rather than prescribing a process. There is little differentiation between the four, but Copilot Agent had a slight edge.

Code search matters for humans and agents

This benchmark reveals a stark reality: the difference between the fastest and slowest tools is the difference between waiting 1 minute and waiting 5 minutes for a complete response. For a developer submitting 20-30 AI prompts per day, this translates to either 20-30 minutes or roughly 1.7-2.5 hours spent waiting. That's the difference between maintaining a productive flow state and constantly context-switching.

The results also expose a critical trade-off in today's tools. Pure vector search (GitHub Copilot) delivers sub-minute responses but fails to find the right files. Agentic search (Codex, Claude Code) finds more relevant code but takes 3-5 minutes per search. Only Jolt’s hybrid approach delivers both speed and accuracy.

For autonomous agents, these differences compound further. An agent completing a complex PR might perform 50+ searches. With Jolt's 15-second search, that's 12.5 minutes of search time. With Claude Code's 3.5-minute average, it's nearly 3 hours. This directly impacts both infrastructure costs and shipping velocity.
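The compounding effect for agents is simple multiplication. The sketch below reproduces the figures in the paragraph above, using the rounded per-search times quoted there (15 seconds and 3.5 minutes) rather than the exact table averages; the 50-search workload is the post's own illustrative number.

```python
def minutes_spent(searches: int, seconds_per_search: float) -> float:
    """Total search time in minutes for a given number of sequential searches."""
    return searches * seconds_per_search / 60


searches = 50
jolt_minutes = minutes_spent(searches, 15)          # 15-second search
claude_minutes = minutes_spent(searches, 3.5 * 60)  # 3.5-minute search

print(f"Jolt: {jolt_minutes} min")
print(f"Claude Code: {claude_minutes} min ({claude_minutes / 60:.1f} h)")
```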

These results demonstrate that Jolt’s hybrid semantic search delivers both the speed and accuracy developers need. Code search has been our core focus. We’ll continue to refine Jolt and deliver the best possible AI coding experience for production codebases. You can try Jolt for free here. Have questions or feedback about this benchmark? Reach us at hello@usejolt.ai.